Talk:Development/Archive01

Where We Are and the Next Steps

The GUI client for CVS (Tortoise) is nice, but it's not available on the live server, so I had to research the CVS command line client. At this point I have learned enough about it (it has a truly impressive number of options and arguments) to download the current CVS baseline as well as specific revisions, tagged versions and project snapshots as of a particular date. (Tested locally as well as in a separate directory tree on the live server.) I can also diff different script versions, but that's the extent of my "CVS fu" for now. Hopefully, this is enough for our purposes and, since I have the root password for the live server, I just need to come up with a specific list of commands which need to be executed during patch installation, run them by Al and get his OK to proceed.

The issue that worries me the most right now is that, as I wrote over on the Community Portal a few hours ago, I can't find a way to associate bugs and feature requests with code changes. We also need to decide how we are going to "tag" the script revisions which have been installed on the live server (unless there is a better way of tracking code installs in CVS).

Development

I have problems with #4. Should we stop modifying scripts until their current versions are installed on the server? (I.e. 'do not touch edit/*_search.py until the last patches are on the server.') --Roglo 10:52, 1 June 2009 (UTC)

- Let's examine the following scenario. Revision 1.1 of ScriptA is installed on the live server. Revision 1.2 has been uploaded to Sourceforge, but hasn't been tested/released yet. Suppose you want to make an unrelated change to ScriptA, which will eventually become 1.3. Should you use revision 1.1 or 1.2 as your baseline? If you use 1.1, then we will have a problem with code divergence and at some point we will have to merge the two sets of changes. If you use 1.2, it may fail testing yet and then you will have to re-implement your changes using 1.1 as the baseline.

- I suspect that the best approach would be to ask the tester and the admin about the current status of 1.2 before doing anything else. If it's almost ready to be approved/released, then it would be reasonable to wait for it to be finalized/released and then use it as the baseline for subsequent changes. If it may not be tested/released for a while and your change is urgent/important, then you may want to use 1.1 as the baseline for 1.3, in which case we will need to create a "branch" for 1.2 and its author will need to merge his changes with 1.3 before it goes out.

- Please note that this is all very much "top of my hat" stuff since I don't know much about this area and may well be off. Back in the day, when you wrote a program in PDP-11 Assembly, it was your program and your headache :-) Ahasuerus 14:50, 1 June 2009 (UTC)

- Well, we already have 'bug divergence', i.e. some bugs may be seen only in the CVS version as in the live server won't be able to get that far. I wonder if we should have Groups 'Live Server' and 'Current CVS' in the Tracker, or only report there bugs seen in the live server, and the bugs in CVS report here. --Roglo 16:53, 1 June 2009 (UTC)

Installation

(see the proposed rules on the main Development page)

Good? Bad? Ugly? Ahasuerus 05:02, 31 May 2009 (UTC)

- Maybe we should use the talk page to discuss what goes here? Feel free to move this. Two thoughts: (1) We should "require" bug/feature numbers on all commits. SourceForge's CVS embeds links to the Tracker database. (2) I strongly recommend deploying whole releases instead of trying to deploy individual fixes piecemeal. The piecemeal/patch approach sooner or later will miss including something in a multi-part change. --MartyD 11:11, 31 May 2009 (UTC)

- Ad (1): I agree with the bug/feature number requirement; sorry about my last hasty commit. I don't see the embedded links though; how to get them?

- Ad (2): Applying individual fixes by checking out file versions won't work, because fixes tend to overlap. To cherry-pick them, you'd have to get individual patches from CVS and apply them to the server, which is risky and might break thing. If we are selecting, which fixes go to the server then, I think, there are two possibilities:

- (a) assume that the 'current' code in CVS in the stable version that should be installed on server and restrict commits to well-tested and approved (so I would have to upload my patch somewhere, e.g. add it to the bug report, and wait until other developers/testers test it and someone approves it officially);

- (b) assume that the 'current' versions has all the new fixes that require testing by others, and create stable branch for the tested and approved fixes. The stable branch would have to be tested again, and the server would be finally updated with it. --Roglo 13:02, 31 May 2009 (UTC)

- That means we need to move to a 'Release' philosophy. i.e. checking in changes for 4.1, while 4.0 is still in the testers hands, and 3.9 is live, close dates, and perhaps even cool release names (chuckle) Kevin 13:21, 31 May 2009 (UTC)

- Or assume that testers and developers using the latest CVS version will notice when something is broken and the server won't be updated until the code is fixed. The code from a week ago should be good if nobody reported problems until now. --Roglo 13:39, 31 May 2009 (UTC)

- There is certainly potential for overlap/conflict between scripts and revisions, so I am all for using releases, but I can't find a way to create them in Sourceforge, which is why I was wondering about using CVS "tags" instead. Perhaps my privilege level is insufficient? I'll shoot Marc an e-mail and see what can be done. Ahasuerus 14:58, 31 May 2009 (UTC)

- I think sf.net's releases are for uploading files. You would tag "REL_20090531" the file revisions that are safe to be installed together on the server and zip them and upload them to sf.net. A release can have multiple files for download (e.g. source, binaries and data) so this is a way to group them. --Roglo 15:17, 31 May 2009 (UTC)

- I have access to the Sourceforge "patch administration" screen, which lets me upload files or "add artifacts", as it calls it. Is it the same as the release administration that you mentioned above? I suppose I could put the tested versions of all "ready" Python scripts in a separate subdirectory tree on my local server and then upload them, but how would I get the whole release from Sourceforge using the CVS command line client?

- Poking around some more, it would appear that CVS "tags" should be sufficient for our purposes. Once a particular version of a script has been tested and approved for installation, we could add a tag, e.g. 2009.01. Then, once everything that we want to go into 2009.01 is ready and has been tagged appropriately, we would simply run "cvs -z3 -d:pserver:anonymous@isfdb.cvs.sourceforge.net:/cvsroot/isfdb co -r 2009.01 isfdb2" and it would install all 2009.01 changes. Or so it would appear -- I haven't been able to test this approach yet since I can't assign tags at the moment. Ahasuerus 17:21, 31 May 2009 (UTC)

- "Patch administration" screen? Isn't it part of the Tracker/Patches interface? --Roglo 17:52, 31 May 2009 (UTC)

- If you go under "Tracker -> Patches", you will see "Add new" at the top of the page, assuming that you have proper privileges. Ahasuerus 20:26, 31 May 2009 (UTC)

- I think it's just like Bugs and Feature Request but for users who created patches for the application and want to share them. Tagging is OK if you do not to get rid of some fixes where the files were later modified by other fixes. E.g. the old ISFDB code is tagged by the tarball date :) To create the tarball, I would use something like:

cvs -d:pserver:anonymous@isfdb.cvs.sourceforge.net:/cvsroot/isfdb export -r archive_2008-01-27 isfdb2 (in a new directory) to avoid having CVS subdirectories.

- --Roglo 17:52, 31 May 2009 (UTC)

- I have just tagged our first script with "r200901", but an attempt to run the export command failed:

cvs -n export -r r200901 -d /home/XYZ/test isfdb2

protocol error: is not absolute

- Any ideas? Ahasuerus 20:26, 31 May 2009 (UTC)

- If I do it without -n, it works fine.

C:\temp>cvs export -r r200901 -d isfdb2 isfdb2

cvs export: Updating isfdb2

cvs export: Updating isfdb2/biblio

cvs export: Updating isfdb2/common

cvs export: Updating isfdb2/common/tests

cvs export: Updating isfdb2/css

cvs export: Updating isfdb2/edit

cvs export: Updating isfdb2/mod

U isfdb2/mod/yv_new.py

cvs export: Updating isfdb2/rest

cvs export: Updating isfdb2/scripts

- I don't know why it's happy about that. --MartyD 21:35, 31 May 2009 (UTC)

- With -n I get

cvs [export aborted]: there is no version here; run 'cvs checkout' first. Why -n? --Roglo 22:08, 31 May 2009 (UTC)

- With -n I get

- I got that when the destination directory did not exist. Maybe -n needs the directory tree to be present, since it won't create it? --MartyD 22:47, 31 May 2009 (UTC)

(unindent)Once "-n" (i.e. the test mode) was removed, it worked fine for me as well. Thanks!

So one possible sequence of events would then be:

- (A) tag all tested and "ready to go" script versions with "r2009.01" (or "r2009-02" etc)

- (B) sign on to the live server

- (C) type "cvs export -r r200901 -d [new directory, e.g. r200901] isfdb2"

- (D) cd to "new directory", "tar -cvf archive-name.tar *"

- (E) copy archive-name.tar to the top level project directory, in this case /home/avonruff/isfdb2

- (F) While in /home/avonruff/isfdb2, type "tar -xvf archive-name.tar"

- (G) Type "make install"

These steps seem to work for me on my local server, but are there any gotchas that I am not thinking of? For example, the root account on the live server has different ssh settings, so I have to use my own account and/or "anonymous" to download Sourceforge files. Which isn't a big deal, but I am somewhat worried that there may be another "gotcha" when I run "make install". Ahasuerus 23:23, 31 May 2009 (UTC)

- The most important thing is the label needs to be on all files. I suggest you declare a "freeze". Since changes right now are all minor, a day's notice or less is probably fine, but longer notice is always good. Once the freeze occurs, put down the label on all current revisions. Announce the label to the testers, and we're all responsible to fetch that revision and do a sanity check (since presumably we've been testing along the way). If that looks ok, you lift the freeze and can deploy that label at your convenience. If something looks bad, we have the choice of fixing it and moving the label onto the new revision(s) or moving the label on those modules back to a prior revision. That would have to be considered case by case. BTW, I don't think there's anything to worry about with regard to an anonymous check-out -- that should be fine for getting a copy. --MartyD 00:21, 1 June 2009 (UTC)

- The use of "freezes" sounds good going forward and I have updated the main list accordingly. Now, my original idea was to use just one change as the guinea pig for the first patch. However, as we know, the source code that Al has in /home/avonruff/isfdb2 doesn't match what's in the cgi directories, so it may be safer to deploy all of those changes as part of r2009-01 as well. That would necessitate deploying a few other fixes since some of the scripts, e.g. pl.py, were modified prior to the incorporation of the live changes, but it should still be manageable. Does this sound reasonable? Ahasuerus 00:53, 1 June 2009 (UTC)

- No guts, no glory! --MartyD 01:07, 1 June 2009 (UTC)

- That's what a hot-headed developer would say, wouldn't he? However, I am supposed to be wearing the system administrator's hat here and aren't they supposed to be the ultra-cautious conservative types? I have already ordered two boxes of buttons that read "Easy Does It!", "One Step at a Time!", "Keep Cool with Coolidge!" and "Incremental Progress Beats Immediate Disaster!" and I am not sending them back! Ahasuerus 01:28, 1 June 2009 (UTC)

- You've got the easy recourse of reverting to the previously installed tree. And we have several people demonstrating that a clean fetch-and-deploy produces a working system. There's probably more risk in trying to cherry-pick changes than there is in deploying the collected set of fixes. --MartyD 01:07, 1 June 2009 (UTC)

- Admittedly, a clean fetch-and-deploy will likely produce a working system, but we haven't fully tested it yet and starting with what would be effectively a full system restore wouldn't be an auspicious start anyway. The next step is to get Al to take a look at all of this and get his blessing/opinion. Then it's off to the races... (And thanks to everyone who got us this far!) Ahasuerus 01:28, 1 June 2009 (UTC)

- IIRC correctly, you can use tags for both individual fixes that might need several modules to change simultaneously, and for tagging an entire system release. I think I've deployed emergency fixes by fix tag while still working on a major release where we decided we might as well refresh everything. So I'd say it's still possible to test with one small fix (just check the dependencies, choose something that didn't differ between CVS and the live server anyway). Or tag everything currently in CVS, test that in its entirety and don't look at any new check-ins unless related to fixing that release, in which case tag just those new fixes with the full release tag. We seem to have created a lot of changes already so you could even cherry-pick several fixes, if the dependencies are worked out, test that set as "r200901" and then leave the rest (or another subset) to "r200902". I'm beginning to get a bit worried that we're not testing everything committed so far, but some fixes are being tested more than others. A freeze on checkins increases the likelihood that developers forget what they did by the time they come to commit, but it would of course make me look far more productive than anyone else! (So far.) ;-) BLongley 19:02, 1 June 2009 (UTC)

Prioritisation

This might be a missing step. It's probably OK while we're choosing and fixing small pieces within our level of knowledge of the application and technical abilities, but at some point it would be better if we knew we had a willing tester before spending time on something, and when we get to really big changes this will become really important. Also, some of the controversial features or fixes may encounter opposition to implementation: e.g. ISFDB:Moderator_noticeboard#Verification_Reference_List. (No biggy this time, I take it as a good practice.) Who is deciding on WONT FIX bugs or DENIED Feature requests? BLongley 18:32, 5 June 2009 (UTC)

"[moved the answer to the Community Portal since it affects everyone, not just the development team.] Ahasuerus

Data-Only Fixes

The example above (Reference Sources) can be worked around by adjusting two rows of data and inserting a few more for additional reference sources. I can supply the SQL for such if people decide on the additional references, but can such be run directly against the live database or do we need to put them in a one-off python script (presumably such go in the isfdb2/scripts directory, like scripts/create_user.py)? I'd rather not have to wrap up the SQL and relearn CVS ADD if it's unnecessary, I'm happier with my SQL than my Python. Another example would be the SQL to fix REVIEWS that have missing reviewed authors. BLongley 18:42, 5 June 2009 (UTC)

- I have direct access to the MySQL command line client and should be able to run MySQL scripts using the "source" command. Naturally, we'll want to test them thoroughly or else risk making an even bigger mess of things. Ahasuerus 20:02, 5 June 2009 (UTC)

- Good news. How do you want them submitted for testing? Use CVS to add SQL scripts somewhere (if so, where?) or just leave them in Sourceforge comments as I've done so far? BLongley 21:08, 5 June 2009 (UTC)

- SQL scripts need to have higher visibility in case we need to go back and figure out how the database ended up in its current state, so CVS sounds like a good place for them. Ahasuerus 22:54, 5 June 2009 (UTC)

- OK, five scripts added for perusal. The last needs discussion about how many Primary Verifications we want to allow, and to confirm Bleiler78 and OCLC are still wanted. BLongley 18:11, 6 June 2009 (UTC)

- There's no bug number for the last yet as Sourceforge won't let me raise one anymore - it's complaining I'm not setting the Group ID. Never needed to before, and it still complains if I set it? BLongley 18:11, 6 June 2009 (UTC)

Database Changes

Possibly the most dangerous of the lot. For example, there's a feature request to extend the length of the page count field. In some databases, changing the length of the field wipes out existing data (I haven't yet checked whether this is the case in MySQL). But there's also been offers to add some database constraints which would prevent data corruption (although if we do that at the database level it might lead to more nasty error messages than if we caught them in the Python apps), and suggestions to add indexes to improve some query performances (although adding indexes tends to slow INSERTs, and initial build can severely affect query performances or even prevent execution while happening). I'm not sure if you can wrap DDL (as opposed to DML mentioned in the last section) in Python scripts, whether we can run DDL as plain SQL, and I'm not sure how to get approval for such dangerous actions. Any comments? BLongley 18:59, 5 June 2009 (UTC)

- Adding indexes shouldn't be too bad except for the performance aspect. We could even take the database off-line while the re-indexing process runs. I expect that we will want to have a month or two of steady and uneventful software changes and patches under our belts before we start tackling database changes. Ahasuerus 20:08, 5 June 2009 (UTC)

- That's why I left it till last. I know that one of my proposed changes (fixing all the broken advanced publication searches at once) introduces the next level of problems with performance, but we can break that down into smaller parts if wanted. BLongley 21:12, 5 June 2009 (UTC)

- By the way, at one point Al said that he wasn't fixing Advanced Search errors because he had plans to do a complete rewrite of that area. It may be worthwhile to ask him what he had in mind (some kind of full text indexing?) in case it obviates our projected changes. Ahasuerus 22:57, 5 June 2009 (UTC)

SourceForge Tools Improvements

Moved from Development to Talk Kevin 04:13, 9 June 2009 (UTC)

Could someone with the proper access please add some 'Category' choices for bug reports. Some likely choices include 'Moderator Interface', 'Editor Interface', 'Search Behavior', 'Search Results Display', 'Biblio Display', 'Title Display', 'Publication Display', 'Main Page Display', 'General Interface' etc. I'm not saying that we are going to have enough developers to concentrate in one area alone, but often a mini project might allow a developer to hit many birds with one stone/check in, /if/ the requests are roughly categorized. Thanks Kevin 13:38, 31 May 2009 (UTC)

- New categories updated. There is some overlap with existing categories, but it should be manageable. Ahasuerus 15:01, 31 May 2009 (UTC)

- Could we also please have some categories for 'Feature Requests'? Kevin 16:13, 31 May 2009 (UTC)

- Done. Also, some bugs have been moved to more appropriate categories, but there is still a fair amount of work to be done in that area. Ahasuerus 17:03, 31 May 2009 (UTC)

Test Results

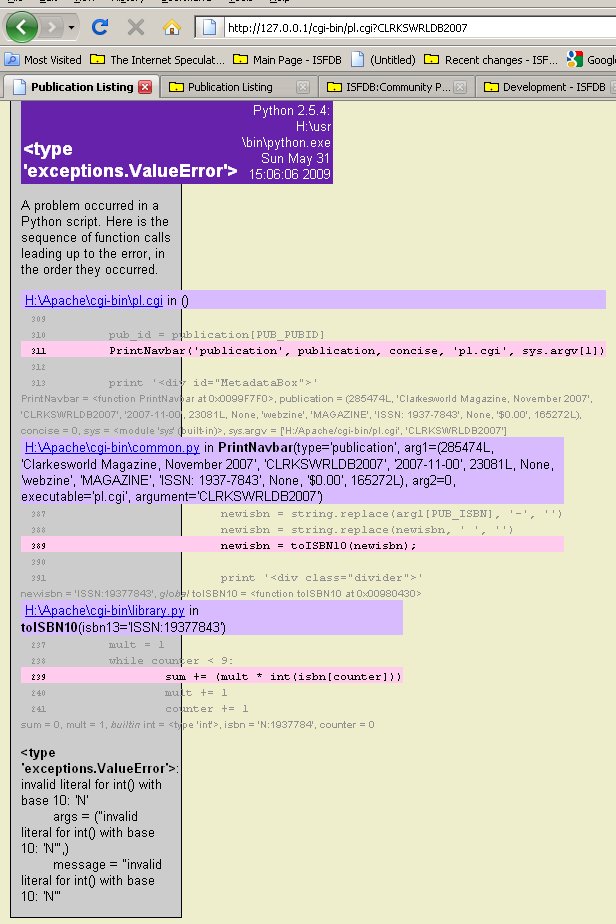

Bug 2271738

I've managed to break the new code already. :-( Bug ID 2271738 "ISBN13 does not link to Amazon and other sites" introduces a new problem when looking at publications which have valid ISSNs. PrintNavBar in biblio/common.py needs more validation before attempting to convert ISBNs.BLongley 14:33, 31 May 2009 (UTC)

- I added an exception trap in toISBN10 (and a more explicit length check), which will take care of the immediate problem. It still doesn't handle the case of leading 979 or anything else that won't produce a matching ISBN10 despite a "successful" conversion. 979 is a big can of worms, since the assumption of 978 on ISBN13's is sprinkled throughout the code. --MartyD 16:30, 31 May 2009 (UTC)

Bug 2803315

Bug 2803315 (parse error on rejected edits page )

I see what looks like part of a months-old edit of mine at the top of the "My Rejected Edits" page. The text displays:

parse error on: 266230 DESiegel60 Whispers #6-7, June 1975 Special Weird Tales issue.

temp: incomplete Supplement (Whispers #6-7) Stuart David Schiff 1975-06-00 SHORTFICTION sf

-DES Talk 05:46, 9 June 2009 (UTC)

- Yup, same here. I suppose this area is particularly hard to test since the publicly available backup file doesn't include the submissions. I'll poke around tonight using the full backup. Ahasuerus 17:24, 9 June 2009 (UTC)

- I've an example too, if needed:

parse error on: 82286 BLongley The Monkey’s Paw http://en.wikipedia.org/wiki/The_Monkey&apost;s_Paw

- BLongley 17:52, 9 June 2009 (UTC)

- This specific error is because of the bad entity ("&apost;" is not a valid entity -- it should be "'". It is causing the XML parsing of the submission (which is only represented as XML, not as data elements) to fail while building the table. The same thing is probably going on in the first example, except the text doesn't show us enough of the XML to be able to tell. There are several open bugs about bad things in the XML causing these sorts of problems. They are not new, and they occur because the raw text does not get encoded while it lives in XML form. --MartyD 00:58, 10 June 2009 (UTC)

- BTW, I saw this error some months ago, so it's not new. Ahasuerus 18:12, 9 June 2009 (UTC)

- This is one of the big gaps we need to resolve. The more our editors learn about ISFDB, the more likely they are to become a moderator. The better they moderate, the better they understand the underlying problems. The better they understand those, the more likely they are to become people that will try to fix them by learning the software. But by the time we've got to that stage we've probably forgotten a lot about what we saw and what we did when we couldn't approve stuff. At work, I solve this disconnect by letting users of any level try out new proposals on a test system. It seems unreasonable to demand a huge level of experience or knowledge to get feedback from "the common man (or woman)" so I guess I'm asking Ahasuerus whether we can implement some sort of publically available test-area for testers that don't know moderator screens, can't install MySQL (let alone ISFDB) and certainly haven't got a local Wiki setup? BLongley 22:39, 9 June 2009 (UTC)

- Distributed proofreaders (the feeder for PG) runs a separate test system, at a different web address which anyone can (with approval) create an account on and experiment with. The test system usually has updates loaded to it some time before they go live. For major updates this allows any user to see and test and give design and UI feedback without needing to implement a local system. Perhaps we should do the same, say at test.isfdb.org? If we have the space and bandwidth for such a thing. A test system could have a much smaller db of course, unless we are testing performance issues. -DES Talk 22:51, 9 June 2009 (UTC)

- A test database would be nice since it would allow less computer savvy people like Michael and Bluesman, who may not be in a position to install the software locally, to test changes. However, I don't want to set it up on the live server because my understanding of many of the moving parts involved, especially as it relates to Wiki software, is tenuous and at some point I would likely screw up and kill the main database while trying to do something with the test database. ("Let's see... Drop all tables... Hm, which database was I connected to again?") I guess we can either wait until I become more comfortable with the server setup, which may take months, or we can set up a separate test server. Volunteers? :) Ahasuerus 23:14, 9 June 2009 (UTC)

- I've been thinking along those lines...but the servers I have on the web are linux, not windows, so the setup won't be the same. No Promises...maybe in a week or two I'll see if I can setup isfdb.pulliamcomputers.com Kevin 00:01, 10 June 2009 (UTC)

- And now I'm unvolunteering. My shared server has no shell access, the web SQL Interface is choking on the ISFDB backup, and the query interface has been nerfed so I can't execute an import command directly. Brick Wall. Kevin 01:45, 10 June 2009 (UTC)

- Don't panic! We may find another volunteer yet :) Ahasuerus 02:10, 10 June 2009 (UTC)

I discovered while working on bug 2803321 that some of the moderator pages handle this error differently: they trap the exception and do nothing, then change the "Subject" to XML PARSE ERROR (since the subject can't be extracted anyway due to the parse error). Any objections to my propagating that treatment to the non-moderator version of the display? --MartyD 16:29, 17 June 2009 (UTC)

- I'm not quite clear what the effect would be from a user PoV of this solution, either for moderators such as myself, or for non-mods. Could you elaborate on what this would look like, or link to an example? -DES Talk 16:33, 17 June 2009 (UTC)

- Instead of getting the "

parse error on 266230 DESiegel60 Whispers #6-7..." scrawled across the top of the display, you'd get a normal-looking display where the table looks like:

- Instead of getting the "

Date/Time Type Reviewer Subject Reason 2009-06-09 20:53:22 TitleUpdate MartyD XML PARSE ERROR completely unacceptable on all counts

- which is how the moderator "recent" and pending submission lists already do it. --MartyD 17:05, 17 June 2009 (UTC)

- Sounds good, bug 2803315 assigned to Marty! :) Ahasuerus 17:31, 17 June 2009 (UTC)

- I checked in this change this morning. Let me know if you see any problems. --MartyD 13:01, 18 June 2009 (UTC)

Bug 2803321

Bug 2803321 My Pending Edits issues. Recent enhancements to the display seem to have lost the submission timestamp, and added a link to User talk:UNKNOWN, which is not helpful. -DES Talk 05:45, 9 June 2009 (UTC)

- It looks like the error is in SQLgetUserName, which lives in common/SQLparsing.py, which was, in turn, modified by Marty for r2009-02. I will send it to Marty for review - thanks! Ahasuerus 17:47, 9 June 2009 (UTC)

- I think the UNKNOWN is because the live users aren't in the backup, and unless you've created dummy ones there's a lot of places where we have no data to show. BLongley 17:57, 9 June 2009 (UTC)

- Ah, I see you're talking about the live one. Not good. :-( BLongley 18:05, 9 June 2009 (UTC)

- Actually, I don't think this is new. It's not the submission timestamp, it's the review timestamp, which isn't set on pending edits, just approved or rejected ones. The name of the reviewer is also null for pending edits, hence the unknown. I suspect my fix for Feature 2799074 will help, as it at least looks to see if it's been held by somebody. But someone could request a change to use submission timestamp instead of review timestamp for this version of the screen if wanted. BLongley 18:30, 9 June 2009 (UTC)

- I recall from about a year ago -- before I became a mod -- that I used to check pending edits fairly often. Unless my memory is in error, it then displayed submission timestamps. If it never did, than IMO it would be a useful albeit minor enhancement if it were to do so in future. -DES Talk 21:32, 9 June 2009 (UTC)

- 45 days ago, it used to show the submission time stamp. If it doesn't now, then it's a new bug. Kevin 22:26, 9 June 2009 (UTC)

- It doesn't for me. Check it for yourself by submitting a test edit or three, and not approving it/them, then visit My Pending Edits. -DES Talk 22:47, 9 June 2009 (UTC)

- That's kind of the point that it's a new bug as in a previously present feature which has now gone away. (chuckle) Kevin 23:10, 9 June 2009 (UTC)

- Ahh I see now you were just telling me to confirm it for myself... Naah. Why duplicate effort. I believe you. Kevin 23:12, 9 June 2009 (UTC)

- Sorry, but I don't think it's a new bug. I restored the backup of the live ISFDB tree that Ahasuerus did before he deployed any of our source changes, and the behavior exists there, too. The two pieces of code responsible for this had no relevant changes. The selection out of the database is the same as it has been: "select * from submissions..." and the date column has been, and continues to be, filled from [5], the sixth column, which is the reviewed date. I also tried it on existing and new submissions, and both behave the same way (and I checked the submission insertions, and they have not changed). --MartyD 00:50, 10 June 2009 (UTC)

- (shakes head) - Could have sworn I could see submission timestamps on my pending edits 2 months ago. (shrug) - Oh well. Kevin 01:17, 10 June 2009 (UTC)

- Well, it's conveniently been logged and assigned to me, and I do know exactly the right spot to fix it, and it's an awfully easy fix.... Perhaps it will go away soon. BTW, I seem to think I saw dates, too, but who knows. Eyewitnesses are so unreliable. --MartyD 01:28, 10 June 2009 (UTC)

- "It's a conspiracy I tell ya!" Kevin 02:14, 10 June 2009 (UTC)

- Change checked in this morning. For any of the "recent" views, the submission date/time will be shown if there is no reviewed date/time. BTW, the moderator submission list was always showing the submission time. Maybe that is where the feeling of having seen dates comes from? Dunno. Anyway, let me know if you see any problems. --MartyD 13:03, 18 June 2009 (UTC)

- "It's a conspiracy I tell ya!" Kevin 02:14, 10 June 2009 (UTC)

- Well, it's conveniently been logged and assigned to me, and I do know exactly the right spot to fix it, and it's an awfully easy fix.... Perhaps it will go away soon. BTW, I seem to think I saw dates, too, but who knows. Eyewitnesses are so unreliable. --MartyD 01:28, 10 June 2009 (UTC)

- (shakes head) - Could have sworn I could see submission timestamps on my pending edits 2 months ago. (shrug) - Oh well. Kevin 01:17, 10 June 2009 (UTC)

- Sorry, but I don't think it's a new bug. I restored the backup of the live ISFDB tree that Ahasuerus did before he deployed any of our source changes, and the behavior exists there, too. The two pieces of code responsible for this had no relevant changes. The selection out of the database is the same as it has been: "select * from submissions..." and the date column has been, and continues to be, filled from [5], the sixth column, which is the reviewed date. I also tried it on existing and new submissions, and both behave the same way (and I checked the submission insertions, and they have not changed). --MartyD 00:50, 10 June 2009 (UTC)

- Ahh I see now you were just telling me to confirm it for myself... Naah. Why duplicate effort. I believe you. Kevin 23:12, 9 June 2009 (UTC)

- That's kind of the point that it's a new bug as in a previously present feature which has now gone away. (chuckle) Kevin 23:10, 9 June 2009 (UTC)

- It doesn't for me. Check it for yourself by submitting a test edit or three, and not approving it/them, then visit My Pending Edits. -DES Talk 22:47, 9 June 2009 (UTC)

- 45 days ago, it used to show the submission time stamp. If it doesn't now, then it's a new bug. Kevin 22:26, 9 June 2009 (UTC)

- I recall from about a year ago -- before I became a mod -- that I used to check pending edits fairly often. Unless my memory is in error, it then displayed submission timestamps. If it never did, than IMO it would be a useful albeit minor enhancement if it were to do so in future. -DES Talk 21:32, 9 June 2009 (UTC)

- Actually, I don't think this is new. It's not the submission timestamp, it's the review timestamp, which isn't set on pending edits, just approved or rejected ones. The name of the reviewer is also null for pending edits, hence the unknown. I suspect my fix for Feature 2799074 will help, as it at least looks to see if it's been held by somebody. But someone could request a change to use submission timestamp instead of review timestamp for this version of the screen if wanted. BLongley 18:30, 9 June 2009 (UTC)

Sourceforge troubles?

I have put r2009-04 together and will start testing shortly, but I seem to be unable to do "view" or "diff" on Sourceforge. I am getting a blank screen and the page source shows Python errors. Could someone else please confirm this behavior before we go bugging Sourceforge admins? TIA! Ahasuerus 02:39, 16 June 2009 (UTC)

- (download) and (as text) work for me. On (view) and (annotate) I get a blank page. With Diff to previous for some files I get blank and for some (aa_search.py, the two last patches - cached somewhere?) colored diff. There is a message about problems with ViewVC on Sourceforge blog but I'm using Firefox and still getting a blank. --Roglo 06:02, 16 June 2009 (UTC)

- Yes, same problem here: Python errors ending "UnknownReference: 0" on a blank page when trying a diff. BLongley 17:42, 16 June 2009 (UTC)

- Looks like it has been fixed -- thanks for checking! Ahasuerus 01:44, 17 June 2009 (UTC)

Sourceforge instability

According to Sourceforge:

On Tuesday 2009-06-30 at 16:00 UTC, we will be testing the first phase of our new consumer (user) pages.

During this testing, you may encounter some instability with the site. Deploying these changes involves major architectural adjustments. Instability is expected to last no more than a few minutes at a time.

Once our testing has completed (and any immediate issues have been resolved), we will be reverting to the prior version of the site. Once the site is "back to normal" on the old code, we'll be doing our final planning for the launch of the new consumer experience. We tentatively plan this launch to occur on Wednesday 2009-07-01.

Ahasuerus 13:02, 30 June 2009 (UTC)

- Well, that explains the current "500 Internal Server Error", "nginx/0.7.60" I'm getting. BLongley 18:05, 30 June 2009 (UTC)

Advanced Search and Unicode

Advance Publication search on strings which include "£" is broke - copied from Bill's Talk page.

A search on "Price=£3.99" generated the following query:

select pubs.* from pubs where pubs.pub_price='\xa33.99' order by pubs.pub_title limit 100

which suggests that this may be a Unicode problem. Will keep digging - might as well teach myself a bit more Python :) Ahasuerus 18:20, 5 July 2009 (UTC)

- It turns out that the culprit is "repr" in escape_quotes in isfdblib.py. Here is what happens:

str = ' £3.99 ' print str £3.99 print repr(str) ' \xa33.99 '

- Apparently, this behavior was changed in a recent (2008) Python 3.0 patch, but there is no way to change Python 2.x because 2.x's strings are non-Unicode and because of backward compatibility concerns. The next question, then, is what does "repr" do for us that makes it required in this case? According to the documentation, it "[r]eturn a string containing a printable representation of an object. This is the same value yielded by conversions (reverse quotes)." A little experimentation suggests that it preserves substrings like "\t" and "\n" which would be otherwise converted to non-printable characters. This is nice, but (a) is it really valuable in our world and (b) can we accomplish the same thing using some other method that doesn't destroy Unicode characters? Ahasuerus 19:30, 5 July 2009 (UTC)

repralso escapes single quotes:

>>> repr("ab'c'de")

'"ab\'c\'de"'

- Perhaps it is used for escaping string used to build SQL query? There is no

db.escape_string()inMakeSQLterm. Perhaps it should be used instead of this function? --Roglo 20:14, 5 July 2009 (UTC)

- Perhaps it is used for escaping string used to build SQL query? There is no

- After running a few more tests, it looks like all searches on Unicode characters fail and some "succeed" in bizarre ways, e.g. an Advanced Title Search on "Title contains 42" returns "Возвращение в Мир Смерти" and a search on "43" returns "Εἰς Σφίγγην" :-\. I think it's safe to say that this is a system-wide problem and needs to be addressed system-wide. I will test Bill's modifications as they currently exist (plus my fix for the dollar sign and other escaped characters) and we will need to log a Bug re: Unicode searches although I seem to recall that one already exists. Ahasuerus 03:07, 7 July 2009 (UTC)

Local copy auto-update

My local copy of the database apparently decided to auto(?)-update (at least some files) to what's in Sourceforge. There will be a slight delay as I rebuild the lego house. Ahasuerus 04:39, 10 July 2009 (UTC)

Reviewed Author searches

I have been testing Feature 2799065, "Add Reviewed Author Search", as well as "USE INDEX with publication searches by author", which affect the same scripts. The good news is that the addition of USE INDEX made Advanced Title searches by Author almost instantaneous, but the bad news is that the newly implemented searches on Reviewed Authors take well 15-20 seconds on my system. Here is the SQL statement that is displayed at the top of the page:

QUERY: select titles.* from titles where titles.title_id in (SELECT t1.title_id FROM titles t1, canonical_author ca1, authors a1 WHERE t1.title_ttype='REVIEW' AND t1.title_id=ca1.title_id AND ca1.ca_status=3 AND ca1.author_id=a1.author_id AND a1.author_canonical LIKE'%bester%') order by titles.title_title limit 100

Any suggestions? I am fairly worthless development-wise at the moment due to some kind of flu (?), but I can still test, although slowly. Ahasuerus 19:34, 11 July 2009 (UTC)

- This either is, or is like, one of the queries we discussed elsewhere before that needs to be rewritten as a join instead of using "select ... where ... in (select ...)". I don't remember what became of that discussion. --MartyD 20:48, 11 July 2009 (UTC)

- Give this to Roglo again, when he's back? His changes only affected "Author" searches, not "Reviewed Author". I'd have a look at it but a quick "copy what Roglo did" just made it so much worse that I thought my machine had locked up. (Who let amateurs like me loose on this stuff anyway?) BLongley 20:57, 11 July 2009 (UTC)

- It turns out the inner select takes a good chunk of time. I'll take a look at it. SQL and I are long-time acquaintances. --MartyD 10:43, 12 July 2009 (UTC)

- Let me know if this performs any better than the above query on your systems:

- select titles.* from titles, canonical_author ca1, (select author_id from authors a where a.author_canonical LIKE '%bester%') a1 where titles.title_ttype='REVIEW' and titles.title_id = ca1.title_id and ca1.ca_status = 3 and ca1.author_id = a1.author_id order by titles.title_title limit 100;

- It's virtually instantaneous on my system in a newly-started MySQL, but I'm not 100% convinced there isn't some caching going on.... --MartyD 12:14, 12 July 2009 (UTC)

- That takes 4 seconds rather than 31 here. BLongley 13:35, 12 July 2009 (UTC)

- About 5 when incorporated in the ISFDB code - good enough for me! Thanks for looking at it. BLongley 19:45, 12 July 2009 (UTC)

Links from ISFDB to the Wiki

There are two types of links from the ISFDB proper to the ISFDB Wiki at this point. One type is what you see on the main moderator (aka "new submissions") page. It links to the locally installed version of the Wiki, i.e. "www.isfdb.org" on the live server but "127.0.0.1" on a local server, so local links are similar to 127.0.0.1/wiki/index.php/User:Root and 127.0.0.1/wiki/index.php/User_talk:Root. The other type is hardcoded links which always read isfdb.org/wiki/index.php/User:Root and isfdb.org/wiki/index.php/User_talk:Root regardless of what the local installation says. These differences have no impact on the live server -- at least as long as our software resides on www.isfdb.org -- but we need to decide how we want the software to behave for developers.

As far as I can see, there are two main arguments in favor of linking to the local version of the Wiki software. First, we have been forced to move from host to host a number of times and although we have always kept the domain name and the site structure, it's possible (although probably unlikely) that the next move may be to a host with different Wiki policies, which would necessitate changes to links and wreak havoc with our database-Wiki linkage.

Second, those of us who have a local copy of the Wiki software installed and who use it to test changes which affect the Wiki side -- e.g. see Marty's recent work on notifying users when they have new messages -- need to be able to connect from the local version of ISFDB to the local version of the Wiki.

On the other hand, Bill reports that he finds the hardcoded "www.isfdb.org" links useful when testing his changes locally. I am not sure I fully understand how he uses them, so I will "yield the balance of my time time to the gentleman from England" :) Ahasuerus 22:47, 12 July 2009 (UTC)

- We could add to localdefs.py another constant: WIKIHOST and then use that in place of HTMLHOST / "www.isfdb.org" wherever a link to the wiki is generated. Then any configuration can be configured either way. If there are no objections, I can go do that. (BTW, I noticed I used HTMLLOC instead of HTMLHOST in the "My Messages" change -- I will go fix that now.... --MartyD 10:06, 13 July 2009 (UTC)

- I like that solution. It will be a largish update impacting a ton of files but it's a 'one and done' kind of fix. I vote yes for approval and yes for implimentation. Kevin 13:17, 13 July 2009 (UTC)

- Hmmm... Maybe WIKILOC, with the path in it, would be even better. --MartyD 15:05, 13 July 2009 (UTC)

- Sure, that should work. As far as "impacting a ton of files" goes, I don't think we have that many links between Python scripts and the Wiki (yet) and, besides, we don't have to change all links at the same time. Might as well start now while the number of links is still low. Ahasuerus 16:52, 13 July 2009 (UTC)

- I've no preference between WIKILOC or WIKIHOST, but I think another localdefs.py is the right way to go. If anybody has figured out what each of the other names is supposed to be used for, I'd like to see those documented as well. BLongley 17:48, 13 July 2009 (UTC)

- If WIKILOC = "www.isfdb.org/wiki" suits everybody I'll start making some changes based on that. BLongley 18:37, 14 July 2009 (UTC)

- I interpret the above as violent agreement among those voicing an opinion.... --MartyD 18:48, 14 July 2009 (UTC)

- I hope so, as I went nuts. ;-) BLongley 20:12, 14 July 2009 (UTC)

- Hm, if we were squirrels, it looks like we would be all set for the winter :) Ahasuerus 00:11, 15 July 2009 (UTC)

- For my next set of Berserker coding (don't worry, it's not going to be tonight), I think I'd like to roll out Feature 2816520 to a few more submission types. (For instance, "Series Update" and "Remove Titles from this Pub" and "Make Variant" and "Title Merge" have all annoyed me tonight.) I don't think such are too controversial (they're additions rather than changes) but that FR has been closed. Do we need to open another and discuss all the possibles to death? BLongley 21:54, 15 July 2009 (UTC)

- See FR #2822160 More choices for moderator after approval. I don't see that extended discussion is needed. -DES Talk 22:23, 15 July 2009 (UTC)

- Seems straightforward, non-controversial and desirable, so assigning to Bill. Ahasuerus 22:45, 15 July 2009 (UTC)

Fixing pseudonymous reviews and interviews

I have used the logic from Bill's "scripts/fix_missing_interviewees.sql" to identify and manually clean up all Interview Title records with blank "interviewee" Author fields. There were only 22 of them, including a peculiar case of "mutual interviewing", so it didn't take long and we don't need to run that script any more.

Unfortunately, we have over 1,000 Review records with blank "reviewee" fields, so we will need to run the Review-specific script. It has been code reviewed and will be applied later this week when r2009-11 is installed. (r2009-10 will be a small patch to implement WIKILOC and one sample script.) Ahasuerus 02:26, 16 July 2009 (UTC)

Python question

My knowledge of Python is quite limited, but I can usually get simple things done by beating my head against the wall until the wall cracks. However, since we have at least a few people who know Python (Al, Marty, and Roglo), I might as well ask here before activating my SOP.

I am trying to prevent duplicate Canonical Author names from being generated. I have added a check (adapted from the code in biblio/se.py) which seems to work fine in most cases:

if tag == 'Canonical':

results = SQLFindAuthors(newField)

print results

if results:

print "Error: Canonical name '%s' already exists, duplicates are not allowed." % (newField)

PrintPostSearch(0, 0, 0, 0, 0)

sys.exit(0)

However, if the entered Canonical name contains an apostrophe, the SQL lookup fails to find existing authors and the entered duplicate name gets through. This is surprising since the same code handles apostrophes correctly in biblio/se.py, so I assume that I am missing something.

I have added the following debugging code:

print newField for letter in newField: print letter," "

which displayed the following results for "Brian O'Brien":

Brian O’Brien B r i a n O & r s q u o ; B r i e n

(Note that the apostrophe in the output line is different.) I assume that this has something to do with the apostrophe conversion problem that Al had a few years ago, but I don't know how he fixed it, so any guidance would be appreciated. TIA! Ahasuerus 02:18, 27 July 2009 (UTC)

- At what point are you trying to add this? When an editor tries to edit an author (edit/submitauth.py), when a moderator looks at it (mod/av_update.py), or when a moderator approves it (mod/aa_update.py)? Or more than one place? BLongley 18:28, 27 July 2009 (UTC)

- Sorry, I thought I mentioned that it was edit/submitauth.py, but apparently not :( Ahasuerus 19:03, 27 July 2009 (UTC)

- And while playing around with Mr O'Brien's surname, I notice that one of the bibliography displays still breaks. "Summary", "Alphabetical", "Chronological" work, "Awards" doesn't. A comparison of those might provide some clue as to where things have been fixed and where they haven't been. BLongley 18:44, 27 July 2009 (UTC)

- Good point, I'll play with them tonight! Ahasuerus 19:03, 27 July 2009 (UTC)

- Once I found the right version of the SQLparsing file, I was able to fix the Award problem (Bug 2828187), but still no luck with the canonical name check. Oh well, there is always tomorrow... Ahasuerus 03:05, 28 July 2009 (UTC)

- I think I've found it. At the point you're checking it, the data has been XMLescaped to make sure it passes through all the XML stages. It will be XMLunescaped before the final update is done two modules later. If you change

results = SQLFindAuthors(newField)

- to

newField2 = XMLunescape(newField) results = SQLFindAuthors(newField2)

- then you'll be comparing like for like and the look-up works. BLongley 18:38, 28 July 2009 (UTC)

- Looks very promising, I'll try it tonight, thanks! :) Ahasuerus 19:43, 28 July 2009 (UTC)

- And there was much rejoicing! Thanks again! :-) Ahasuerus 00:59, 29 July 2009 (UTC)

Testing

With Marty and Roglo on vacation, there hasn't been much need for testing/deployment lately, which left me with some free time to work on development of my own. I have made 6 changes so far (see the main Development page) and they are ready to go, but I would feel better if they were cross-tested by someone else. Any volunteers? :) Ahasuerus 01:32, 29 July 2009 (UTC)

- I'll have a look if nobody else will: I can't promise a quick response though. I'm feeling particularly unhealthy at the moment, but don't know if it's just my bad habits, swine flu, an allergic reaction to PCI-DSS audits, or something else. If the body is weak but the mind stays strong, moving a few electrons around is fine. If I get a proper fever, then I should avoid work here, lest you discover rejections like "Cthulhu has forbidden such edits!" or "You named the one that should not be named". (Which one is that? And can you tell what I'm reading now? ) BLongley 22:42, 29 July 2009 (UTC)

- Yes, but if I were to name Hast... er, I mean "that entity", I might become a mad monk or something along those lines. On second thought... Ahasuerus 23:01, 29 July 2009 (UTC)

- P.S. Don't worry about testing if you are not feeling well. I plan to do a local reinstall and more testing tonight, so hopefully it should catch any issues. Ahasuerus 23:02, 29 July 2009 (UTC)

Serials and Lexical Match

As per the results of the recent "Serials and Lexical Match" discussion on the Community Portal,FR 2823387 has been created. At this time it reads:

Eliminate the "lexical match logic" by making Serials into VTs of their parent Novel/Shortfiction Titles.

A. Change the Summary Bibliography and Title page behavior so that Serial VTs would appear under "Magazine/Anthology Appearances:" rather than under "Variant Title:". (See the way Heinlein's Starship Troopers/Starship Soldier currently appears.)

B. Identify and manually review/adjust the 300+ Serial records that are currently set up as VTs. Delete all fake Novel/Shortfiction Titles that have been set up to support the partial match logic.

C. Create a script that will find all Serial records that are currently NOT set up as VTs. For each one, check if there is a matching Novel/Shortfiction Title with the same Author(s). If there are 2+ matches, identify them as exceptions and generate a list which will need to be manually reviewed. If there is exactly one match, then automatically create a VT relationship between the Serial record(s) and the matching Novel/Shortfiction record.

D. Eliminate the lexical match logic on the Summary Bibliography page and on the Title page.

Additional proposed change:

E. Get rid of the word "Anthology" in "Magazine/Anthology Appearances" since we generally don't use Serials in Anthologies.

Note:

At this time there are three Summary Bibliography scenarios as far as Serials are concerned:

- If there is a (lexically) matching Novel Title, then the matching Serial records appear under that Novel Title only and not in the Serial section.

- If there is a matching Shortfiction Title, then the matching Serial records are displayed twice, once under that Shortfiction Title and once in the Serial section.

- If there is no lexically matching Title, then the "orphan" Serial records are displayed in the Serial section. Note that these "orphan" records may be actually related to an existing Novel or Shortfiction Title, but there is no way to link the two if the book appearance used a different title. Well, except for a few experimental attempts to link them, that is. (See, e.g., Starship Troopers vs. Starship Soldier on Heinlein's Summary page.)

If we implement the proposed change, then scenarios 1 and 2 will behave like scenario 1 currently behaves. Scenario 3 will behave the same way as it does now except that many Serials which currently appear there will be linked to their parent Novel/Shortfiction Titles and "move up in the world" to scenario 1/2. The remaining unreprinted Serials will still appear in a separate section, which I think is our preferred behavior. We could conceivably display unreprinted Serials without a parent Novel/Shortfiction Title in the Novel section, but I think that would just mess up our chronological order and generally confuse things.

Next step: Are there additional technical considerations that we need to account for or are the steps outlined at the top of the section sufficient? If they are, then we can ask Marty, out display expert, to implement steps A and E first since they can be done ahead of time. In the meantime, we can start working on steps B and C. Once everything else is done, we can implement the change in step D and declare victory.

Does this sound reasonable? Ahasuerus 02:04, 21 July 2009 (UTC)

- "E" is easy. But I have a question about the ordering of all of this. If the intent is to allow SERIALs Starship Soldier (Part 1 of 2) and Starship Soldier (Part 2 of 2) to be direct variants of the NOVEL Starship Troopers, then the "lexical match" must FIRST be eliminated to get them to displayed as SERIALs at all. But removing the "lexical match" actually breaks the filtering done in the Summary Bibliography -- that makes a master list of serials and expects to be able to display just the matching entries out of that master list for each title as it goes along. Without that match, all SERIALs directly attributed to Robert A. Heinlein end up displayed under the first title on the page (Misfit). So at first blush, it seems we'd need the variants in place first. --MartyD 11:58, 22 July 2009 (UTC)

- That said, I have whipped up a modification to displayVariants and displaySerials that will do what I think is being asked for. I'm not convinced it will do the right thing if the serial's authorship is different, and I don't have time before leaving to test that out. But anyway, the change is to make displaySerials avoid the lexical match when working with a variant and to make displayVariants collect a list of serial-variants instead of displaying them and then have it do displaySerials after handling all of the normal variants. This makes a Magazine Appearances section after the variant titles. --MartyD 11:58, 22 July 2009 (UTC)

- Two observations on the above: (1) I think it would be useful to replace the separate Variant Title: tagging with a parallel single Variant Titles: pseudo-header a/la Magazine Appearances:. (2) I think it might look better to either make the pseudo-header be a non-list entry or to make the items under the pseudo-header be a sublist. --MartyD 11:58, 22 July 2009 (UTC)

- I am now officially on vacation and will be doing nothing more with this. Someone else will have to handle testing, discussion and screen shots. The change I am about to commit should work ok, but there may be variations I did not anticipate. Someone please fix it or undo it if it causes local developers problems. --MartyD 11:58, 22 July 2009 (UTC)

- I am in the process of testing these changes and so far they look good. The only unexpected side effect that I have found is with VTs, e.g. here is what the live server displays for Heinlein's "Gulf":

- Gulf (1949) [also as by Robert Heinlein ]

- Magazine/Anthology Appearances:

- Gulf (Part 1 of 2) (1949)

- Gulf (Part 2 of 2) (1949)

- Gulf (1949) [also as by Robert Heinlein ]

- while the new code displays it differently:

- Gulf (1949) also appeared as:

- Variant Title: Gulf (1949) [as by Robert Heinlein ]

- Magazine Appearances:

- Gulf (Part 1 of 2) (1949)

- Gulf (Part 2 of 2) (1949)

- Gulf (1949) also appeared as:

- This is not a deal breaker and we can address it when you come back from your vacation, so I plan to install the changes later today unless I find additional issues. Ahasuerus 17:44, 25 July 2009 (UTC)

- I cannot reproduce this. A local build of current code matches the production display using the Aug 1, 2009 backup. --MartyD 20:40, 2 August 2009 (UTC)

- This only happens if you make the two Serial records into VTs of the Shortfiction record, presumably because you now have three VTs as opposed to one. Not a big deal, but it would be nice to make the script count only "real" VTs unless there are performance implications. Ahasuerus 02:56, 3 August 2009 (UTC)

- Here is another issue which occurs when a novel/story has been serialized more than once:

- Beyond This Horizon (1942) also appeared as:

- Magazine Appearances:

- Beyond This Horizon (Complete Novel) (1952)

- Magazine Appearances:

- Beyond This Horizon (Part 1 of 2) (1942)

- Beyond This Horizon (Part 2 of 2) (1942)

- Beyond This Horizon (1942) also appeared as:

- This happens with the current state of the database where Beyond This Horizon (Complete Novel) is a VT of the Novel Title but Beyond This Horizon (Part 1 of 2) and Beyond This Horizon (Part 2 of 2) are done via lexical match.

- Yes. This is because the VT display was changed to display serial VTs as magazine appearances. So you've got one Magazine Appearances "section" that's due to VTs. The other section is the still-live non-VT handling, done later (after all of the VT handling). Once the separate non-serial appearances are made into VTs, there will be only one Magazine Appearances section. If one "parent" title has a combination of the two approaches, this is what you'll see. The only easy away around this I can think of is to convert all of the data first, then deploy code to address the resulting display issues. --MartyD 20:40, 2 August 2009 (UTC)

- Thanks, that makes sense and we can certainly wait until after the mass change. Ahasuerus 02:56, 3 August 2009 (UTC)

- There is also another, more serious problem. The original two part serialization in Astounding in 1942 appeared as by "Anson MacDonald", so we currently have the Anson MacDonald Serial Titles VT'd to "Robert A. Heinlein" and then the lexical match logic kicks in for display purposes. The 1952 reprint Serial also appeared as by "MacDonald" -- yes, 4 years after the book appeared as by Heinlein -- and we currently have the Serial record set up as a VT of the Novel record, which shows the pseudonym correctly.

- Following the approach outlined at the beginning of this section, I broke the 1942 VTs on my test system and deleted the two 1942 "Robert A. Heinlein" Serial Titles. I then made the two 1942 "Anson MacDonald" Serial Titles into VTs of the main "Robert A. Heinlein" Novel Title. Here is what I see now:

- Beyond This Horizon (1942) also appeared as:

- Magazine Appearances:

- Beyond This Horizon (Part 1 of 2) (1942)

- Beyond This Horizon (Part 2 of 2) (1942)

- Beyond This Horizon (Complete Novel) (1952)

- Beyond This Horizon (1942) also appeared as:

- There is no indication that the original 1942 Serial or the 1952 reprint Serial appeared as by Anson MacDonald. I think this warrants putting this change on the back burner until Marty comes back and has a chance to review all permutations. Ahasuerus 18:03, 25 July 2009 (UTC)

- This makes my brain hurt. I think I need a vacation.... I'll take a look at it and see what I can figure out. As I mentioned above, I don't know how well we'll be able to do this piecemeal, trying to get the new way working while having the old way also continue to work. It might be easier to make all of the VTs and then address the display issues that result. --MartyD 19:50, 2 August 2009 (UTC)

- No worries, I am sure we can live with slightly incorrect VT labels for a few weeks while we are sorting Serials out. And welcome back! :-) Ahasuerus 19:53, 2 August 2009 (UTC)

- This one's definitely a problem. Maybe I didn't set up the call to displaySerials correctly. I will have to look into it further. --MartyD 20:55, 2 August 2009 (UTC)

- Looks like I spoke too soon. Perhaps it is not actually a problem. This would essentially be a variant of a variant (the novel is the parent, the serial appearance by Heinlein the variant, the serial appearance by MacDonald the variant of the variant), which much/most of the display code does not handle. --MartyD 23:14, 2 August 2009 (UTC)

- "Variant of a Variant" should never happen -- there is a block of code in the Make Variant script to re-point all would-be grandchildren to the proper/new parent. Ahasuerus 23:51, 2 August 2009 (UTC)

- If VTs are to continue to go to a single, master parent, then this would have to be corrected by making the MacDonald VTs have the Heinlein novel as the parent instead of the Heinlein serial appearance. Does that make sense? --MartyD 23:14, 2 August 2009 (UTC)

- It certainly makes sense and it's exactly what I did locally. As per above, "I broke the 1942 VTs on my test system and deleted the two 1942 "Robert A. Heinlein" Serial Titles. I then made the two 1942 "Anson MacDonald" Serial Titles into VTs of the main "Robert A. Heinlein" Novel Title." The result was the "Anson MacDonald-less" display above, although I can't reproduce it at the moment since I have refreshed the database since then. Ahasuerus 23:51, 2 August 2009 (UTC)

- Ok, I was able to recreate this situation, and I've committed a fix. The Serials stuff wasn't trying to display authorship. Now it should (in the "Magazine Appearances" section) if the author is different or if the authorship is shared. Still makes my brain hurt, but I think that's the right thing for it to do. --MartyD 02:05, 3 August 2009 (UTC)

- Thanks, I'll take it for a test drive! :-) Ahasuerus 02:18, 3 August 2009 (UTC)

- (After testing) Looks good so far. One other thing that we may want to alter before the mass Serial change is the behavior of the Title display page. At this time, the live Title page for Gulf shows the following VT/Serial entries:

- Variant Titles:

- * Gulf (1949) - Robert Heinlein

- Serials:

- * Gulf (Part 1 of 2) (1949)

- * Gulf (Part 2 of 2) (1949)

- After converting the 2 Serial records to VTs on my local test system, I see the following behavior:

- Variant Titles:

- * Gulf (Part 1 of 2) (1949)

- * Gulf (Part 2 of 2) (1949)

- * Gulf (1949) - Robert Heinlein

- It would be nice to make the page appear the way it currently appears on the live server, with all the "true" VTs listed first and the Serials further down. Ahasuerus 02:56, 3 August 2009 (UTC)

- Whoops, I appear to have broken the Starship Soldier example while fixing Storylengths. :-( The background is basically that a lot of SERIAL records had Storylengths like "The Time Bender" for all "Axe and Dragon" SERIAL records, apparently a carry-over from ISFDB1 where variants were being recorded in the field. I've fixed most of those and used the data to create Serial/Novel connections where we didn't have them already. "Starship Solider" was one of those cases were we'd suddenly started displaying "Storylen: Starship Troopers". I think there are a few more to chase up - I'll need to look at the next backup though, to make sure we don't lose the data. E.g. we had no record that "The Hounds of Hell" was the magazine title for "(A) Plague of Demons" by Keith Laumer. BLongley 20:22, 2 August 2009 (UTC)

- The current situation is already unsatisfactory, e.g. see C. M. Kornbluth and look how "Mars Child", "Gravy Planet" and "Gladiator at Law" (with no dashes) show. You can at least find the NOVEL entry under the variant title and click on it to get the Serial records, but they're still displayed independently as well. For Poul Anderson, "Trader Team (1965) [SF] only appeared as: Variant Title: The Trouble Twisters (1965)" might be a bit misleading as it obviously did appear as a serial that way. But at least it isn't listed in Serials only. BLongley 20:22, 2 August 2009 (UTC)

(unindent) This section is awfully convoluted. To summari(s|z)e, as of 8-Aug-2009, the two remaining issues are:

- Lines -- if the lone non-serial title has a sole author-variant VT but some additional serial VTs, the display puts the author-variant VT on a separate, labeled line instead of using [also as by... ]

- Sequence -- on the Title page, we'd like VTs that are serials to be listed after VTs that are not serials.

If there's anything else I missed in the above, speak soon. --MartyD 10:49, 8 August 2009 (UTC)

- I have committed changes to address both of the above. The only tiny gotcha I noticed in the Title display is that it would be nice to label the group of serial VTs Serials: as is seen in the current display, but there's still a separate section for that (for those titles not yet converted to VTs). Rather than trying to combine them (which would have had sorting problems) or run the risk of having two -- possibly separated by Awards: -- sections, I figure we can wait on the conversion of the titles and then add a Serials: label in the VT portion of the display if we want it. --MartyD 11:35, 8 August 2009 (UTC)

- Great, thanks! I'll test the changes later today. Ahasuerus 14:40, 8 August 2009 (UTC)

- Everything looks OK, r2009-16 installed. Next I will create a Wiki page for the currently VT's Serial records (there are some 360 of them) so that we can start re-pointing them to the canonical Novel records manually.

Auto-conversion script

We will also need to create an SQL script to establish VT relationships between non-VT'd Serial Titles and lexically matched Novel Titles. The logic is fairly simple:

- The Serial Title is not VT'd

- The Serial's Authors match the Novel's authors exactly, although the order may be different

- The Serial title matches the Novel title up to the left parenthesis

I suspect it's beyond my meager SQL abilities, so we will need volunteers again. If it's not easy to do in pure SQL -- and the fact that the Authors can be out of order looks hard to do right -- we will need a Python script. Ahasuerus 04:35, 9 August 2009 (UTC)

- Actually, that's not quite right. They need to be made variants of the novel or its parent, if the novel is itself a variant. --MartyD 10:34, 9 August 2009 (UTC)

- Good point! Ahasuerus

- Here's a shot at a SQL match for the corresponding novel, from which we could construct an update (or dump the numbers into an update script):

select s.title_id, s.title_title, n.title_id, n.title_title, p.title_id, p.title_title

from titles s, titles n, titles p

where s.title_ttype = 'SERIAL' and s.title_parent = 0

and n.title_ttype = 'NOVEL' and n.title_title = trim(substring_index(s.title_title,'(',1))

and not exists

(select * from canonical_author sa where sa.title_id = s.title_id and not exists

(select * from canonical_author na where na.title_id = n.title_id and na.author_id = sa.author_id))

and p.title_id = if(n.title_parent = 0, n.title_id, n.title_parent)

order by s.title_title;

- The first two columns are the serial, the second two are the matching novel, the third two are what the variant should go to (the matching novel's parent, if it's a variant, otherwise the matching novel). I don't know if it's finding everything it should (there are 3,100+ serials that are not VTs). I tried allowing ANY author in common, and that came up with a 21 or so more. I know there are some 90+ non-variant VTs with no parens in the title:

select title_id, title_title from titles where title_ttype = 'SERIAL' and title_parent = 0 and title_title not like '%(%)%' order by title_title;

- and it should find those, but I see no such instances; perhaps the authors don't match. We may need to do all of the easy stuff and then take a look at what non-VT serials are left. --MartyD 12:03, 9 August 2009 (UTC)

- I certainly agree that we should do the easy stuff first and then see what's left and whether it needs to be automated or whether it can be done manually. I have also identified all Serial records that do not follow the "(Part ..."/"(Complete..." standard and we will need to handle them manually as well. Ahasuerus 18:28, 9 August 2009 (UTC)

USE INDEX error found

Earlier today I ran an Advanced Title Search for:

- author contains 'Zelazny'

- AND title contains 'This Immortal'

- OR title contains Conrad

At that point MySQL froze and I tried to kill the thread, which didn't work too well since MySQL insisted on cleaning up the temporary table(s), which took a long time. Eventually the thread went away, but there were still multiple locked threads and I had to bounce the MySQL server to get everything back to normal. Clearly, this is not acceptable since it can result in serious database degradation, so we need to fix the problem quickly or disable the affected part of the Advanced Search until the problem can be fixed.

Here is what I saw when I ran mysqladmin processlist --verbose:

| 1993630 | root | localhost | isfdb | Query | 202 | Copying to tmp table | select titles.* from titles,authors,canonical_author USE INDEX(authors) | where authors.author_canonical like '%zelazny%' and authors.author_id=canonical_author.author_id | and canonical_author.ca_status=1 and titles.title_id=canonical_author.title_id AND | titles.title_title like '%conrad%' OR titles.title_title like '%this immortal%' | order by titles.title_title limit 100

At first I thought that perhaps "USE INDEX" was causing this problem, but then I ran the select statement locally without it and the query still hung. Then I put parentheses around:

titles.title_title like '%conrad%' OR titles.title_title like '%this immortal%'

and the query returned what looked like the correct results in 2.89 seconds:

| 112736 | . . . And Call Me Conrad (Part 1 of 2) | NULL etc | 112737 | . . . And Call Me Conrad (Part 2 of 2) | NULL etc | 2493 | ...And Call Me Conrad | NULL etc | 994752 | A Word from Zelazny (. . . And Call Me Conrad (Part 1 of 2)) | NULL etc | 994753 | Synopsis of Part One (. . . And Call Me Conrad) | NULL etc | 186683 | This Immortal | NULL etc

Then I re-ran the query with USE INDEX (authors) and it returned the same results in 1.3 seconds. So, a question for all SQL experts: Will this fix, i.e. putting parentheses around search terms 2 and 3 work for all cases? If so, I can add them to all affected scripts and deploy the fix shortly. Ahasuerus 21:35, 29 August 2009 (UTC)

- The short answer is the constraints should be parenthesized to have the query behave as intended. The clauses are evaluated left-to-right. So A and B and C gets you everything matching all three constraints. A or B and C gets you everything matching either of the first two constraints and also matching the third -- "(A or B) and C" -- and works out ok. A and B or C gets you everything matching the first two constraints plus everything matching the third constraint -- "(A and B) or C" -- which is likely not what is meant and in this case is very unhappy on top of it. The interaction with the user interface is going to be tricky. Probably the best bet for now is to parenthesize all of the constraints as a group and further parenthesize any two adjoining OR constraints: ((A or B) and C) and (A and (B or C)). If there's only one constraint -- "(A)" or just a pair -- "(A and B)" or "((A or B))", the extra parens will not do any harm. I'm from the "you can never have too many parentheses" school of making sure the logic evaluator does exactly what I mean. --MartyD 22:21, 29 August 2009 (UTC)

- Makes sense, thanks! :) Now to figure out how to get the scripts to insert parentheses in the right places... Ahasuerus 22:32, 29 August 2009 (UTC)

Identifying Authors with non-VT titles who are labeled "Pseudonyms"

Feature request 2830507 reads:

- Some Author records are marked "Pseudonym" yet they have non-VT Titles associated with them, either because the VTs haven't been created yet or, occasionally, because the Author is a real person but his/her name has been used by another author, e.g. "V. C. Andrews".

- Currently any author marked as a "Pseudonym" has the word "Pseudonym" displayed in Author Search results. We want to enhance this behavior to make it clear that there are non-VT Title records (when applicable) for the Author. The exact wording is to be determined.

I have modified the three scripts that handle this logic, but I am having a little trouble with the SQL query. Here is what I have at the moment (adjusted for readability):

select count(*) from canonical_author as a,titles as t WHERE a.author_id ='ID' AND t.title_id = a.title_id AND t.title_parent = 0 AND (t.title_ttype !='REVIEW' OR (t.title_ttype ='REVIEW' AND a.ca_status = 1))

It seems to work OK and I have even committed it to CVS, but I am not sure whether it will handle Authors with non-VT Interview records. I spent about an hour trying to figure it out earlier today, but all I got was a headache, so I wonder if perhaps some SQL-savvy developer may be able to improve the code. TIA! Ahasuerus 02:08, 17 August 2009 (UTC)

- What exactly are you trying to have the query figure out? And are you worrying about things the author wrote or things of which the author is the subject? --MartyD 10:45, 17 August 2009 (UTC)

- I am trying to determine whether an Author who is labeled "pseudonym" has any non-VT Titles. Normally, a pseudonymous Author will have all of his/her Titles VT'd to the canonical name -- or, for joint pseudonyms and house names, to the canonical names -- but there are three exceptions to the rule:

- The Author was a real writer at one point, but then his identity was taken over by another writer, e.g. V. C. Andrews. This is fairly rare, but it happens.

- The Author has been marked as a pseudonym in error -- see Robert A. Heinlein and Henry Kuttner.

- Neither of the first two scenarios applies, yet the Author has non-VT Titles that haven't been set up as VT's. Most of the time it's an oversight, but sometimes we don't know who is responsible for a particular Title attributed to a house name, so we don't know what canonical name to use.

- What this affects is how the Author's Summary Bibliography page is displayed. When there are no non-VT Titles, the "Pseudonym" line appears in big bold letter. When there are non-VT Titles, the "Pseudonym" line doesn't appear and the non-VT Titles are displayed instead.

- When a user runs an Author search (regular or Advanced), he gets a list of Authors and one of the columns tells him whether each Author is marked as a pseudonym. If an Author record is marked that way and there are no non-VT Titles associated with it, then we display the word "Pseudonym". However, if non-VT Titles exist, then we want to display something else in this column, e.g. "Has pseud. titles", which will tell the user that the Author record is either unusual (V. C. Andrews, a house name, etc) or, more likely, not fully processed. Hence it's important to be able to tell whether a pseudonymous Author record has non-VT Titles associated with it.

- My first thought was to use "biblio.py", which has this logic already built in. And it did work quite well for regular searches, but then I realized that biblio.py is not available to the Advanced Search scripts since it's not a "common" module and is not copied to the "edit" sub-directory. I don't suppose there is a way to load a module from another sub-directory, is there?

- Well, you could, but in the structure that's set up, the proper way to do it is to put it into a new or existing module in common. --MartyD 10:32, 18 August 2009 (UTC)

- My next approach was to write a custom SQL query and check for non-VT Titles associated with the Author. That worked well for regular Titles, but then I found a pseudonymous Author who has written lots of non-VT Reviews and had to enhance the query to account for Reviews. That worked, but the query became substantially more complex. Finally, I realized that I would face the same problem with authors who have non-VT'd Interviews, but by that point my brain wasn't functioning well and I was making no progress, so I decided to ask for help :) Ahasuerus 02:04, 18 August 2009 (UTC)

- If you're only worried about authorship of any non-VT title, then I'm pretty sure all you need is

select 1 from dual where exists (select * from canonical_author a, titles t WHERE a.author_id ='ID' AND t.title_id = a.title_id AND t.title_parent = 0 AND a.ca_status = 1)

- The only time REVIEW or INTERVIEW come into play is if you're also interested in cases where the pseudonym is somehow the 'viewee. If you want to include those as well, omit the "AND a.ca_status = 1". --MartyD 10:32, 18 August 2009 (UTC)

- Thanks, that worked! I must have misinterpreted the table structure when I went digging after my first experiment didn't yield the results that I had expected. I blame gremlins, leprechauns and, of course, the mensheviks. Ahasuerus 11:16, 18 August 2009 (UTC)

- Those questions asked, it looks to me like REVIEW and INTERVIEW are treated the same way in the code, so if you need a REVIEW-specific restriction, you also need a similar restriction for INTERVIEW. I believe ca_status = 1 for authorship (w/ca_status = 2 for interviewee and ca_status = 3 for reviewee). So if you're just worried about authorship, I think you should use "AND a.ca_status = 1" instead of the REVIEW-related clause you have; no need to restrict it by title type. As an aside, if you don't need a count, using

select 1 from dual where exists (select * from canonical_author ...)

has the potential for better performance. --MartyD 10:45, 17 August 2009 (UTC)

- Thanks, I will give it a shot! Ahasuerus 02:04, 18 August 2009 (UTC)

Database freeze

MySQL froze earlier today while executing the following query (output from mysqladmin processlist):

Query | 748 | Copying to tmp table | select titles.* from titles,authors,canonical_author USE INDEX(authors) where ( authors.author_canon |

I believe I saw something similar when the database froze the last time, but I didn't take a snapshot. I can test various permutations of Advanced Author Search later tonight, but I wonder if our SQL-savvy folks could take a look at the code and see if there is anything obviously wrong with it?

P.S. Is there a better MySQL command to determine what a suspect process is doing? Ahasuerus 16:36, 12 August 2009 (UTC)

- Was this your local server or the live one? BLongley 18:22, 12 August 2009 (UTC)

- It was the live server. We had a couple dozen queries locked out while it was trying to perform this "copy to tmp file" operation. Ahasuerus 18:38, 12 August 2009 (UTC)

- And do you have the rest of the query? Or was this using the ISFDB software and mysqladmin is reporting as much as it can display, but doesn't know which bit of Python it's from? BLongley 18:22, 12 August 2009 (UTC)